
Minimum System Requirements for Running Qwen-2.5 Locally: Hardware & Software Specifications

Qwen2.5-1.5B

1.5B parameters, ideal for chatbots & IoT.

Qwen2.5-7B

7B parameters, strong for text and code.

Qwen2.5-32B

32B parameters, advanced reasoning & multilingual.

Qwen2.5-72B

72B parameters, top-tier for R&D & complex AI.


Problem

You want to run Qwen-2.5 on a local server but are unsure which hardware and software it needs to perform well. Large language models (LLMs) like Qwen-2.5 need fast CPUs, plenty of memory, and GPUs to run efficiently.

Solution

This guide breaks down the minimum and recommended system requirements for different Qwen-2.5 variants (7B, 14B, 72B) and gives guidelines on CPU vs. GPU performance, storage, and memory needs.

1. Qwen-2.5 Model Variants and Approximate Sizes

Note: The larger the model, the more VRAM (GPU memory), RAM and disk space required.
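As a rough rule of thumb, the memory taken by the weights is the parameter count multiplied by the bytes stored per parameter. The sketch below applies that arithmetic to a few Qwen-2.5 sizes. The function name `weight_size_gb` is illustrative, and the result covers weights only: activations, KV cache, and framework overhead come on top.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_size_gb(params_billions: float, precision: str = "fp16") -> float:
    """Approximate size of the model weights in GB (1 GB = 1e9 bytes)."""
    return params_billions * 1e9 * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    for size in (7, 14, 32, 72):
        row = ", ".join(
            f"{p}: {weight_size_gb(size, p):.1f} GB" for p in BYTES_PER_PARAM
        )
        print(f"Qwen2.5-{size}B -> {row}")
```

The same estimate is a reasonable first guess for download size on disk, since checkpoint files are dominated by the weights.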

2. Minimum & Recommended Hardware Requirements

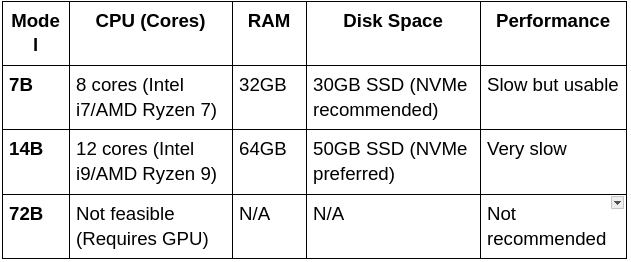

Minimum Hardware Requirements (For CPU-Only Inference)

Running Qwen-2.5 without a GPU is extremely slow and only suitable for experimentation.

Key Takeaways:

- CPU-only inference is impractical for anything beyond 7B models.

- Expect slow response times (several minutes per prompt) without a GPU.

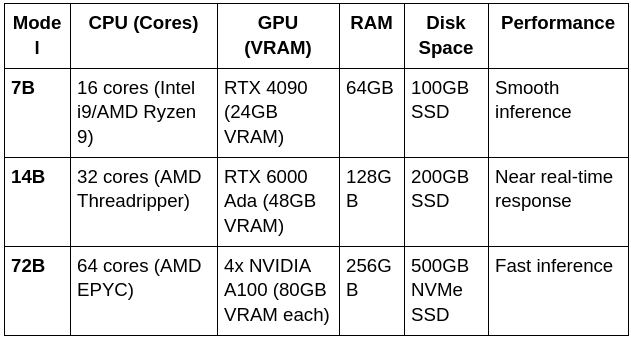

Minimum GPU Requirements (For Usable Performance)

If you want to use GPU acceleration, ensure your system meets these minimum specifications.

Key Takeaways:

- At least 24GB VRAM is needed for comfortable execution of 7B/14B models.

- FP16 halves GPU memory needs relative to FP32, and 8-bit or 4-bit quantization reduces them further.

- Running 72B models locally is impractical without A100/H100 GPUs.
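To see how precision interacts with a given amount of VRAM, the following sketch lists which precisions would fit on a card. It is an estimate only: the `overhead` multiplier of 1.2 is an assumption standing in for KV cache, activations, and framework buffers, and real usage varies with context length and batch size. The function name is hypothetical.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def precisions_that_fit(params_billions, vram_gb, overhead=1.2):
    """Return the precisions whose estimated footprint fits in vram_gb.

    overhead is an assumed multiplier for KV cache, activations and
    framework buffers; it is not a measured value.
    """
    fits = []
    for name, nbytes in BYTES_PER_PARAM.items():
        # Billions of parameters times bytes per parameter gives GB.
        needed_gb = params_billions * nbytes * overhead
        if needed_gb <= vram_gb:
            fits.append(name)
    return fits


if __name__ == "__main__":
    for size in (7, 14, 72):
        print(f"{size}B on 24 GB:", precisions_that_fit(size, 24))
```

Under these assumptions, a 7B model fits in 24 GB at FP16, a 14B model fits only when quantized, and a 72B model does not fit on a single 24 GB card at any of the listed precisions.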

Recommended Hardware for Fast & Efficient Inference

Key Takeaways:

- For 7B/14B models, a single RTX 4090 is sufficient.

- For 72B models, you need at least 4x A100 GPUs.

- High RAM and NVMe SSDs help speed up model loading.

3. Storage & Disk Space Considerations

Beyond just model weights, disk space is required for temporary caching, dataset processing, and logs.

Tip: If disk space is limited, consider quantized models (e.g., 4-bit versions) to reduce file sizes.
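Before downloading a model, it can help to check free disk space against the expected size of the weights. The sketch below uses Python's standard `shutil.disk_usage`. The helper name `has_room_for` and the 1.5x headroom for caches and logs are assumptions, not figures from this guide.

```python
import shutil


def has_room_for(path: str, required_gb: float, headroom: float = 1.5) -> bool:
    """True if the filesystem holding `path` has required_gb * headroom free.

    headroom is an assumed safety factor for temporary caches and logs.
    """
    free_gb = shutil.disk_usage(path).free / 1e9
    return free_gb >= required_gb * headroom


if __name__ == "__main__":
    # Example: roughly 14 GB of FP16 weights for a 7B model.
    print("Enough space for 7B FP16:", has_room_for(".", 14))
```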

4. Operating System & Software Requirements

Tip: Before loading a model, confirm that PyTorch can see your GPU by checking that torch.cuda.is_available() returns True.
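A minimal way to run that check and list the detected GPUs is sketched below. It assumes PyTorch may or may not be installed and returns a plain dictionary either way. The function name `describe_gpu_setup` is illustrative.

```python
def describe_gpu_setup() -> dict:
    """Report whether PyTorch is installed and can see a CUDA device."""
    info = {"torch_installed": False, "cuda_available": False, "devices": []}
    try:
        import torch
    except ImportError:
        # PyTorch is missing; nothing more can be checked.
        return info

    info["torch_installed"] = True
    info["cuda_available"] = torch.cuda.is_available()
    if info["cuda_available"]:
        for i in range(torch.cuda.device_count()):
            props = torch.cuda.get_device_properties(i)
            info["devices"].append(
                {"name": props.name, "vram_gb": round(props.total_memory / 1e9, 1)}
            )
    return info


if __name__ == "__main__":
    print(describe_gpu_setup())
```

If `cuda_available` is False on a machine with an NVIDIA GPU, the usual suspects are a CPU-only PyTorch build or a driver problem.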

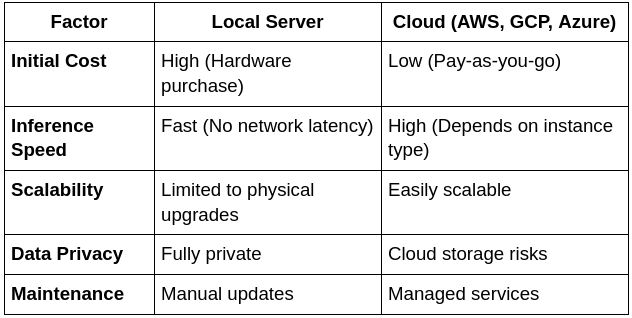

5. Performance Comparison – Local vs. Cloud Hosting

Summary:

- Cloud hosting is better for short-term use or scaling.

- Local hosting is best for long-term cost efficiency and security.
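The trade-off between the two options can be framed as a break-even calculation: how many hours of use it takes before buying hardware costs less than renting. The sketch below shows the arithmetic. All prices in the example are hypothetical placeholders, not quotes from any vendor or cloud provider.

```python
def break_even_hours(hardware_cost, cloud_rate_per_hour, local_cost_per_hour=0.0):
    """Hours of use after which buying hardware is cheaper than renting.

    Returns None when renting costs no more per hour than running
    locally, in which case the purchase never pays for itself.
    """
    saving_per_hour = cloud_rate_per_hour - local_cost_per_hour
    if saving_per_hour <= 0:
        return None
    return hardware_cost / saving_per_hour


if __name__ == "__main__":
    # Hypothetical numbers: a 3000 workstation, 2.00/hour cloud GPU,
    # 0.50/hour local electricity and upkeep.
    hours = break_even_hours(3000, 2.0, 0.5)
    print(f"Break-even after about {hours:.0f} hours of use")
```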

Conclusion

Running Qwen-2.5 locally requires careful hardware planning.

Key Recommendations:

- For small-scale inference (7B/14B) – RTX 4090 + 64GB RAM is sufficient.

- For large-scale models (72B) – Requires A100/H100 GPUs or a cloud setup.

- Use SSDs & optimized PyTorch settings for best performance.
