System Requirements for OpenThinker 7B: Minimum and Recommended Specs

Introduction

OpenThinker 7B is a large language model designed for efficient inference and deployment. To run it effectively, your system must meet certain hardware and software requirements, which depend on your use case: a local machine, a cloud server, or a containerized environment.

This document outlines the minimum and recommended system specifications for running OpenThinker 7B.

Hardware Requirements

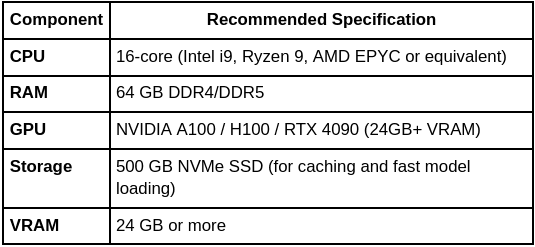

The minimum and recommended specifications are described below.

Minimum Hardware Requirements (For Small Scale Inference)

If you plan to run OpenThinker 7B for basic tasks or development purposes, the following specs should be sufficient: at least 16 GB of RAM and a GPU with 6 GB of VRAM.

With these specs, expect reduced performance. They are better suited to batch processing than to real-time inference.
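To put the VRAM figures in context, here is a rough back-of-the-envelope sketch of how much memory the model weights alone would occupy at different precisions. It assumes a nominal 7 billion parameters and ignores the KV cache, activations, and runtime overhead, so real usage will be higher. The article does not say which precision its 6 GB figure assumes; the function names and the precision table are illustrative only.

```python
# Rough weight-memory estimate for a nominal 7-billion-parameter model.
# Assumption: exactly 7e9 parameters; the real checkpoint may differ slightly.
PARAMS = 7_000_000_000

# Bytes per parameter for common storage formats (weights only).
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params: int, bytes_per_param: float) -> float:
    """Return weight memory in decimal gigabytes (1 GB = 1e9 bytes)."""
    return params * bytes_per_param / 1e9


def fits(vram_gb: float, precision: str, params: int = PARAMS) -> bool:
    """True if the weights alone fit in vram_gb (ignores KV cache and activations)."""
    return weight_memory_gb(params, BYTES_PER_PARAM[precision]) <= vram_gb


def summary() -> dict:
    """Map each precision to its estimated weight memory in GB."""
    return {name: weight_memory_gb(PARAMS, bpp) for name, bpp in BYTES_PER_PARAM.items()}
```

Under these assumptions, 16-bit weights need about 14 GB, so a 6 GB GPU would only hold the weights in a 4-bit quantized form (about 3.5 GB), while a 24 GB GPU leaves headroom for 16-bit weights plus cache.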

Recommended Hardware Requirements (For Optimal Performance)

For full-scale inference, training, or production deployment, consider a GPU with 24 GB or more of VRAM, such as an RTX 4090, A100, or H100.

This configuration supports real-time responses, lower latency, and larger workloads.

Software Requirements

The model requires GPU acceleration for efficient performance. If running on CPU, expect significantly slower inference times.
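One way to check whether a machine has a suitable NVIDIA GPU is to query `nvidia-smi`, which ships with the NVIDIA driver. The sketch below is a minimal example using only the Python standard library; it compares reported memory against the 6 GB and 24 GB figures from this article. The helper names and the three category labels are hypothetical, and the approach only covers NVIDIA hardware.

```python
import shutil
import subprocess

MIN_VRAM_GB = 6.0    # minimum VRAM figure from this article
REC_VRAM_GB = 24.0   # recommended VRAM figure from this article


def parse_gpu_csv(text: str) -> list[tuple[str, int]]:
    """Parse 'name, memory_mib' lines into (name, memory_mib) tuples."""
    gpus = []
    for line in text.strip().splitlines():
        name, _, mem = line.rpartition(",")
        if name and mem.strip().isdigit():
            gpus.append((name.strip(), int(mem.strip())))
    return gpus


def classify(memory_mib: int) -> str:
    """Classify a GPU by total memory; nvidia-smi reports MiB, so convert to GB."""
    memory_gb = memory_mib * 1024 ** 2 / 1e9
    if memory_gb >= REC_VRAM_GB:
        return "recommended"
    if memory_gb >= MIN_VRAM_GB:
        return "minimum"
    return "below minimum"


def query_gpus() -> list[tuple[str, int]]:
    """Return detected NVIDIA GPUs, or an empty list if nvidia-smi is unavailable."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,memory.total",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=False,
    )
    return parse_gpu_csv(result.stdout) if result.returncode == 0 else []
```

An empty list from `query_gpus()` means no NVIDIA GPU was detected, in which case inference would fall back to the CPU and run significantly slower.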

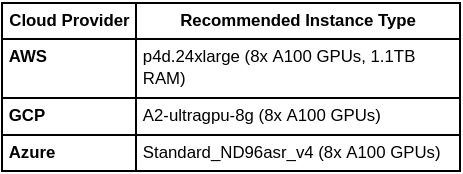

Cloud Deployment Requirements

If you deploy OpenThinker 7B on a cloud service such as AWS, GCP, or Azure, instances with A100 GPUs are recommended for high-performance workloads.

For smaller cloud deployments, you can use T4 GPUs (AWS g4dn, GCP T4, Azure NCas_T4_v3), but expect slower inference.

Performance Benchmarks

Cloud-based deployments with A100 or H100 GPUs provide the best performance for production use cases.
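To compare hardware yourself, generation throughput in tokens per second is a common metric. As a minimal sketch, assuming the model is served through Ollama (mentioned in the conclusion), the non-streaming response from its `/api/generate` endpoint includes `eval_count` (tokens generated) and `eval_duration` (generation time in nanoseconds). The helper name below is hypothetical, and the numbers in the usage note are made up for illustration, not measurements.

```python
def tokens_per_second(response: dict) -> float:
    """Compute generation throughput from an Ollama /api/generate response.

    Expects 'eval_count' (tokens generated) and 'eval_duration' (nanoseconds).
    """
    eval_count = response["eval_count"]
    eval_duration_ns = response["eval_duration"]
    if eval_duration_ns <= 0:
        raise ValueError("eval_duration must be positive")
    # Convert nanoseconds to seconds before dividing.
    return eval_count / (eval_duration_ns / 1e9)
```

For example, a response reporting 200 tokens generated in 4,000,000,000 ns corresponds to 50 tokens per second. Running the same prompt on each candidate machine gives a like-for-like comparison.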

Conclusion

Key Takeaways:

OpenThinker 7B requires at least 16 GB of RAM and a GPU with 6 GB of VRAM for basic operations.

For optimal real-time inference, 24 GB or more of VRAM (RTX 4090, A100, H100) is recommended.

Cloud deployments (AWS, GCP, Azure) benefit from A100 GPUs for high-performance workloads.

Containerized deployments with Docker and Ollama provide an efficient way to run OpenThinker 7B across different systems.
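As an illustration of the Ollama route, the sketch below sends a prompt to a locally running Ollama server over its HTTP API using only the Python standard library. It assumes Ollama is listening on its default port (11434), whether installed directly or running in a Docker container with that port published, and that the model has been pulled under the tag `openthinker:7b`. The tag is an assumption; check `ollama list` for the name on your system.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint
MODEL_TAG = "openthinker:7b"  # assumed tag; verify with `ollama list`


def build_request(prompt: str, model: str = MODEL_TAG,
                  url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming generate request for the Ollama HTTP API."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt and return the generated text (requires a running server)."""
    with urllib.request.urlopen(build_request(prompt), timeout=timeout) as resp:
        return json.loads(resp.read())["response"]
```

With the server running, `generate("Explain VRAM in one sentence.")` returns the model's reply as a string. Building the request separately from sending it keeps the payload easy to inspect without network access.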

By meeting the recommended requirements, you can ensure a smooth experience with OpenThinker 7B while maximizing efficiency and model performance.
